Video extension

The video filters you create are easily integrated into apps to supply your video effects.

Understand the tech

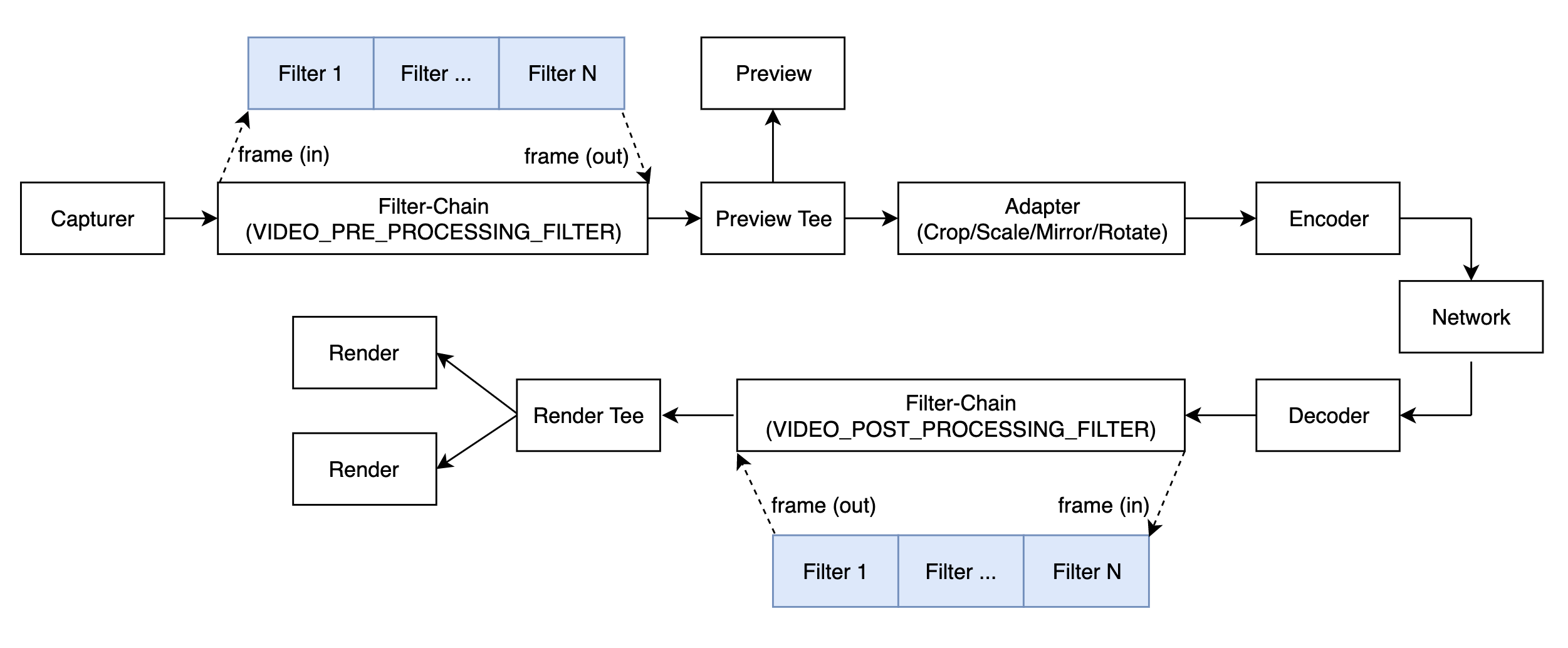

A video filter accesses video data when it is captured from the user's local device, modifies it, then plays the updated data to local and remote video channels.

A typical transmission pipeline consists of a chain of procedures: capture, pre-processing, encoding, transmitting, decoding, post-processing, and playback. To modify the video data in the transmission pipeline, video extensions are inserted into either the pre-processing or the post-processing procedure.

Prerequisites

In order to follow this procedure you must have:

- Android Studio 4.1 or higher.

- Android SDK API Level 24 or higher.

- A mobile device that runs Android 4.1 or higher.

- A project to develop in.

Project setup

In order to integrate an extension into your project:

- Unzip Video SDK to a local directory.

- Copy the header files in rtc/sdk/low_level_api/include under the directory of your project file.

You are now ready to develop your extension.

Create a video extension

To build a video filter extension, you use the following APIs:

- IExtensionVideoFilter: This interface implements the function of receiving, processing, and delivering video data.
- IExtensionProvider: This interface encapsulates the functions in IExtensionVideoFilter into an extension.

Develop a video filter

Use the IExtensionVideoFilter interface to implement a video filter. You can find the interface in the NGIAgoraMediaNode.h file. Implement this interface first. At a minimum, you must implement the following methods:

- getProcessMode
- start
- stop
- getVideoFormatWanted
- adaptVideoFrame
- pendVideoFrame
- deliverVideoFrame
- setProperty
- getProperty

The following code sample shows how to use these APIs together to implement a video filter:
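As a minimal sketch of how these callbacks fit together, the following self-contained C++ models a synchronous pass-through filter. Every type here (Frame, Control, and the enums) is a stand-in invented for illustration; the real declarations are in NGIAgoraMediaNode.h and may differ in names and signatures.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>
#include <utility>
#include <vector>

// Stand-in types: assumptions for illustration only, not the SDK declarations.
enum class ProcessMode { Sync, Async };
enum class ProcessResult { Success, ByPass, Drop };
enum class FrameType { RawPixels };
enum class PixelFormat { Unknown, I420, NV21, NV12, RGBA };

struct Frame {
  int width = 0;
  int height = 0;
  std::vector<std::uint8_t> data;
};

struct Control {};  // hypothetical handle that the SDK passes to start()

// A pass-through filter that implements the callbacks named on this page.
class PassThroughFilter {
 public:
  // Called first: tell the SDK how frames should be exchanged.
  void getProcessMode(ProcessMode& mode, bool& independent_thread) {
    mode = ProcessMode::Sync;    // frames arrive through adaptVideoFrame
    independent_thread = false;  // this sketch does not use OpenGL
  }

  // Called after the pipeline starts: keep the Control handle for later use.
  int start(std::shared_ptr<Control> control) {
    control_ = std::move(control);
    running_ = true;
    return 0;
  }

  // Called before the pipeline stops: release what start() acquired.
  int stop() {
    running_ = false;
    control_.reset();
    return 0;
  }

  // Called before each frame: request raw I420 pixels.
  void getVideoFormatWanted(FrameType& type, PixelFormat& format) {
    type = FrameType::RawPixels;
    format = PixelFormat::I420;
  }

  // Synchronous processing: copy the input to the output unchanged.
  ProcessResult adaptVideoFrame(const Frame& in, Frame& out) {
    if (!running_) return ProcessResult::ByPass;  // not started yet
    out = in;  // a real filter would modify the pixels here
    return ProcessResult::Success;
  }

 private:
  std::shared_ptr<Control> control_;
  bool running_ = false;
};
```

The sketch keeps processing trivial on purpose so that the call order (getProcessMode, start, getVideoFormatWanted, adaptVideoFrame, stop) stays visible.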

Encapsulate the filter into an extension

To encapsulate the video filter into an extension, you need to implement the IExtensionProvider interface. You can find the interface in the NGIAgoraExtensionProvider.h file. The following methods from this interface must be implemented:

- enumerateExtensions
- createVideoFilter

The following code sample shows how to use these APIs to encapsulate the video filter:
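A rough sketch of the provider's role, using stand-in types rather than the SDK headers: the provider reports which filters it offers and then creates one on request. ExtensionType, ExtensionMetaInfo, VideoFilter, the name "DemoFilter", and the use of std::vector and std::shared_ptr are all assumptions made so that the example compiles on its own; the real interface in NGIAgoraExtensionProvider.h may use different parameter types.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <vector>

// Stand-in types: assumptions for illustration only.
enum class ExtensionType { VideoPreProcessingFilter, VideoPostProcessingFilter };

struct ExtensionMetaInfo {
  ExtensionType type;
  std::string extension_name;
};

// Placeholder for a class that implements IExtensionVideoFilter.
struct VideoFilter {
  std::string name;
};

class DemoExtensionProvider {
 public:
  // Report every filter this provider can create.
  void enumerateExtensions(std::vector<ExtensionMetaInfo>& extension_list,
                           int& extension_count) const {
    extension_list.clear();
    extension_list.push_back(
        ExtensionMetaInfo{ExtensionType::VideoPreProcessingFilter, "DemoFilter"});
    extension_count = static_cast<int>(extension_list.size());
  }

  // Create the filter that was reported above; unknown names yield nullptr.
  std::shared_ptr<VideoFilter> createVideoFilter(const std::string& name) const {
    if (name != "DemoFilter") return nullptr;
    return std::make_shared<VideoFilter>(VideoFilter{name});
  }
};
```

Returning nullptr for an unknown name is a choice made for this sketch, not documented SDK behavior.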

Package the extension

After encapsulating the filter into an extension, you need to register and package it into a .aar or .so file, and submit it together with a file that contains the extension name, vendor name and filter name to Agora.

- Register the extension

  Register the extension with the macro REGISTER_AGORA_EXTENSION_PROVIDER, which is in the AgoraExtensionProviderEntry.h file. Use this macro at the entrance of the extension implementation. When the SDK loads the extension, this macro automatically registers it to the SDK.

- Link the libagora-rtc-sdk-jni.so file

  In CMakeLists.txt, specify the path to save the libagora-rtc-sdk-jni.so file in the downloaded SDK package according to the following table:

  | File | Path |
  |---|---|
  | 64-bit libagora-rtc-sdk-jni.so | AgoraWithByteDanceAndroid/agora-bytedance/src/main/agoraLibs/arm64-v8a |
  | 32-bit libagora-rtc-sdk-jni.so | AgoraWithByteDanceAndroid/agora-bytedance/src/main/agoraLibs/arm64-v7a |

- Provide extension information

  Create a .java or .md file to provide the following information:

  - EXTENSION_NAME: The name of the target link library used in CMakeLists.txt. For example, for a .so file named libagora-bytedance.so, the EXTENSION_NAME should be agora-bytedance.
  - EXTENSION_VENDOR_NAME: The name of the extension provider, which is used for registering in the agora-bytedance.cpp file.
  - EXTENSION_FILTER_NAME: The name of the filter, which is defined in ExtensionProvider.h.
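The registration step relies on a macro that runs at load time. The arguments of REGISTER_AGORA_EXTENSION_PROVIDER are not shown on this page, so the sketch below does not reproduce it. Instead it illustrates the general self-registration idea with a hypothetical macro, DEMO_REGISTER_PROVIDER, and a hypothetical registry that plays the role of the SDK.

```cpp
#include <cassert>
#include <functional>
#include <map>
#include <memory>
#include <string>
#include <utility>

// Stand-in for the provider base class; the real interface is IExtensionProvider.
struct Provider {
  virtual ~Provider() = default;
};

using ProviderFactory = std::function<std::unique_ptr<Provider>()>;

// Hypothetical registry playing the role of the SDK. A function-local static
// guarantees that the map exists before any registrar object uses it.
std::map<std::string, ProviderFactory>& providerRegistry() {
  static std::map<std::string, ProviderFactory> registry;
  return registry;
}

// Registers a factory from its constructor, which runs during static
// initialization, that is, when the library is loaded.
struct ProviderRegistrar {
  ProviderRegistrar(const std::string& vendor, ProviderFactory factory) {
    providerRegistry()[vendor] = std::move(factory);
  }
};

// Hypothetical macro, NOT the Agora macro: it only shows the pattern.
#define DEMO_REGISTER_PROVIDER(VENDOR, CLASS)    \
  static ProviderRegistrar g_registrar_##VENDOR( \
      #VENDOR, [] { return std::unique_ptr<Provider>(new CLASS()); })

struct DemoProvider : Provider {};

// One line at the entrance of the extension implementation registers it.
DEMO_REGISTER_PROVIDER(DemoVendor, DemoProvider);
```

In this pattern the vendor name passed to the macro is the key under which the provider is later looked up, which mirrors the role described for EXTENSION_VENDOR_NAME.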

Test your implementation

Once you have developed your extension and API endpoints, the next step is to test whether they work properly:

- Functional and performance tests

  Test the functionality and performance of your extension and submit a test report to Agora. This report must contain:

  - The following proof of functionality:
    - The extension is enabled and loaded in the SDK normally.
    - All key-value pairs in the setExtensionProperty or setExtensionPropertyWithVendor method work properly.
    - All event callbacks of your extension work properly through IMediaExtensionObserver.
  - The following performance data:
    - The average time the extension needs to process an audio or video frame.
    - The maximum amount of memory required by the extension.
    - The maximum amount of CPU/GPU consumption required by the extension.

- Extension listing test

  The Extensions Marketplace is where developers discover your extension. In the Marketplace, each extension has a product listing that provides detailed information such as feature overview and implementation guides. Before making your extension listing publicly accessible, the best practice is to see how everything looks and try every function in a test environment.

- Write the integration document for your extension

  The easier it is for other developers to integrate your extension, the more it will be used. Follow the guidelines and create the best integration guide for your extension.

- Apply for testing

To apply for access to the test environment, contact Agora and provide the following:

- Your extension package

- Extension listing assets, including:

- Your company name

- Your public email address

- The Provisioning API endpoints

- The Usage and Billing API endpoints

- Your draft business model or pricing plan

- Your support page URL

- Your official website URL

- Your implementation guides URL

- Test your extension listing

Once your application is approved, Agora publishes your extension in the test environment and sends you an e-mail.

To test if everything works properly with your extension in the Marketplace, do the following:

- Activate and deactivate your extension in an Agora project, and see whether the Provisioning APIs work properly.

- Follow your implementation guides to implement your extension in an Agora project, and see whether you need to update your documentation.

- By the end of the month, check the billing information and see whether the Usage and Billing APIs work properly.

Now you are ready to submit your extension for final review by Agora. You can now Publish Your Extension.

Reference

This section contains content that completes the information on this page, or points you to documentation that explains other aspects of this product.

Sample project

Agora provides an Android sample project agora-simple-filter for developing audio and video filter extensions.

API reference

The classes used to create and encapsulate filters are:

- IExtensionVideoFilter: Implement receiving, processing, and delivering video data.
- IExtensionProvider: Encapsulate your IExtensionVideoFilter implementation into an extension.

IExtensionVideoFilter

Implement receiving, processing, and delivering video data.

Methods include:

- getProcessMode
- start
- stop
- getVideoFormatWanted
- adaptVideoFrame
- pendVideoFrame
- deliverVideoFrame
- setProperty
- getProperty

getProcessMode

Sets how the SDK communicates with your video filter extension. The SDK triggers this callback first when loading the extension. After receiving the callback, you need to return mode and independent_thread to specify how the SDK communicates with the extension.

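| Parameter | Description |
|---|---|
| mode | The mode for transferring video frames between the SDK and extension. You can set it to the following values: Sync (synchronous mode), in which the SDK and extension transfer video frames through adaptVideoFrame; Async (asynchronous mode), in which the SDK sends video frames to the extension through pendVideoFrame, and the extension returns processed video frames to the SDK through deliverVideoFrame. |
| independent_thread | Whether to create an independent thread for the extension: true creates an independent thread; false does not. |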

You can set the value of mode and independent_thread as follows:

- If your extension uses a complicated YUV algorithm, Agora recommends setting mode to Async and independent_thread to false; if your extension does not use a complicated YUV algorithm, Agora recommends setting mode to Sync and independent_thread to false.
- If your extension uses OpenGL for data processing, Agora recommends setting mode to Sync and independent_thread to true.
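The recommendations above amount to a small decision table, sketched here in C++. FilterTraits, ProcessConfig, and recommendedConfig are hypothetical names, and ProcessMode is a stand-in for the SDK enum. Giving OpenGL precedence when both conditions hold is an assumption of this sketch, since the page does not cover that case.

```cpp
#include <cassert>

// Stand-in for the SDK's process mode enum (assumption).
enum class ProcessMode { Sync, Async };

// Hypothetical description of what the extension does internally.
struct FilterTraits {
  bool uses_opengl;
  bool heavy_yuv_algorithm;
};

// The two values that getProcessMode is expected to return.
struct ProcessConfig {
  ProcessMode mode;
  bool independent_thread;
};

// Map the traits of a filter to the recommended mode and thread setting.
ProcessConfig recommendedConfig(const FilterTraits& traits) {
  // Assumption: OpenGL takes precedence if both flags are set.
  if (traits.uses_opengl) return {ProcessMode::Sync, true};
  // Heavy YUV work: asynchronous mode, no independent thread.
  if (traits.heavy_yuv_algorithm) return {ProcessMode::Async, false};
  // Light YUV work: synchronous mode, no independent thread.
  return {ProcessMode::Sync, false};
}
```

A getProcessMode implementation could copy the two fields of the returned ProcessConfig into its output parameters.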

start

The SDK triggers this callback after the video transmission pipeline starts. You can initialize OpenGL in this callback.

The SDK also passes a Control object to the extension in this method. The Control class provides methods for the extension to interact with the SDK. You can call the methods in the Control class based on your actual needs; for example, deliverVideoFrame returns processed frames to the SDK in asynchronous mode.

stop

The SDK triggers this callback before the video transmission pipeline stops. You can release OpenGL in this callback.

getVideoFormatWanted

Sets the type and format of the video frame sent to your extension. The SDK triggers this callback before sending a video frame to the extension. In the callback, you need to specify the type and format for the frame. You can change the type and format of subsequent frames when you receive the next callback.

| Parameter | Description |
|---|---|
| type | The type of the video frame. Currently you can only set it to RawPixels, which means raw data. |
| format | The format of the video frame. You can set it to the following values: Unknown (an unknown format), I420, I422, NV21, NV12, RGBA, ARGB, or BGRA. |

adaptVideoFrame

Adapts the video frame. In synchronous mode (mode is set to Sync), the SDK and extension transfer video frames through this method. By calling this method, the SDK sends video frames to the extension with in, and the extension returns the processed frames with out.

Parameters

| Parameter | Description |
|---|---|
| in | An input parameter. The video frame to be processed by the extension. |
| out | An output parameter. The processed video frame. |

Returns

The result of processing the video frame:

- Success: The extension has processed the frame successfully.

- ByPass: The extension does not process the frame and passes it to the subsequent link in the filter chain.

- Drop: The extension discards the frame.
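To make the three return values concrete, here is a sketch of a synchronous handler that inverts the luma plane of an I420 frame. I420Frame is a stand-in type (tightly packed Y plane followed by the U and V planes), and the enabled flag is a hypothetical switch added for the example; the real method receives SDK frame objects instead.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

enum class ProcessResult { Success, ByPass, Drop };  // stand-in enum

// Stand-in frame: Y plane (width * height bytes), then U, then V,
// each chroma plane subsampled by two in both directions.
struct I420Frame {
  int width = 0;
  int height = 0;
  std::vector<std::uint8_t> data;
};

ProcessResult adaptVideoFrame(const I420Frame& in, I420Frame& out, bool enabled) {
  // Filter switched off: leave the frame to the next link in the chain.
  if (!enabled) return ProcessResult::ByPass;

  // Malformed input: discard the frame instead of reading out of bounds.
  if (in.width <= 0 || in.height <= 0) return ProcessResult::Drop;
  const std::size_t y_size =
      static_cast<std::size_t>(in.width) * static_cast<std::size_t>(in.height);
  const std::size_t chroma_size =
      static_cast<std::size_t>((in.width + 1) / 2) *
      static_cast<std::size_t>((in.height + 1) / 2);
  if (in.data.size() != y_size + 2 * chroma_size) return ProcessResult::Drop;

  // Copy the frame, then invert only the luma samples.
  out = in;
  for (std::size_t i = 0; i < y_size; ++i) {
    out.data[i] = static_cast<std::uint8_t>(255 - out.data[i]);
  }
  return ProcessResult::Success;
}
```

Inverting luma is only a placeholder effect; the size check before touching the buffer is the part worth keeping in a real filter.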

pendVideoFrame

Submits the video frame. In asynchronous mode (mode is set to Async), the SDK submits the video frame to the extension through this method. After calling this method, the extension must return the processed video frame through deliverVideoFrame in the Control class.

Parameters

| Parameter | Description |
|---|---|
| frame | The video frame to be processed by the extension. |

Returns

The result of processing the video frame:

- Success: The extension has processed the frame successfully.

- ByPass: The extension does not process the frame and passes it to the subsequent link in the chain.

- Drop: The extension discards the frame.
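The asynchronous hand-off can be sketched as a queue between pendVideoFrame and deliverVideoFrame. Frame, Control, and AsyncFilter are stand-ins, and the sketch stays single-threaded so that its behavior is deterministic: processPending is a hypothetical method that a real extension would run on its own worker thread, with a mutex around the queue. Dropping frames when the queue is full is also an assumption of this sketch.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <vector>

enum class ProcessResult { Success, ByPass, Drop };  // stand-in enum

struct Frame {
  int id = 0;
};

// Stand-in for the Control object: records what the extension delivers.
struct Control {
  std::vector<int> delivered;
  ProcessResult deliverVideoFrame(const Frame& frame) {
    delivered.push_back(frame.id);
    return ProcessResult::Success;
  }
};

class AsyncFilter {
 public:
  AsyncFilter(Control& control, std::size_t max_pending)
      : control_(control), max_pending_(max_pending) {}

  // Called by the SDK: queue the frame and return immediately.
  ProcessResult pendVideoFrame(const Frame& frame) {
    if (pending_.size() >= max_pending_) return ProcessResult::Drop;
    pending_.push_back(frame);
    return ProcessResult::Success;
  }

  // Hypothetical worker step: process queued frames in arrival order and
  // hand each result back to the SDK through the Control object.
  void processPending() {
    while (!pending_.empty()) {
      Frame frame = pending_.front();
      pending_.pop_front();
      control_.deliverVideoFrame(frame);  // a real filter would modify it first
    }
  }

 private:
  Control& control_;
  std::size_t max_pending_;
  std::deque<Frame> pending_;
};
```

Bounding the queue keeps latency and memory use predictable when processing falls behind capture.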

setProperty

Sets the property of the video filter extension. When an app client calls setExtensionProperty, the SDK triggers this callback. In the callback, you need to return the extension property.

| Parameter | Description |
|---|---|
| key | The key of the property. |
| buf | The buffer of the property in the JSON format. You can use the open source nlohmann/json library for the serialization and deserialization between the C++ struct and the JSON string. |
| buf_size | The size of the buffer. |

getProperty

Gets the property of the video filter extension. When the app client calls getExtensionProperty, the SDK calls this method to get the extension property.

| Parameter | Description |
|---|---|
| key | The key of the property. |
| property | The pointer to the property. |
| buf_size | The size of the buffer. |
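The key and buffer parameters of setProperty and getProperty can be illustrated with a small store that keeps each property as the raw JSON text it received. PropertyStore is a hypothetical class, and both the return codes (0 for success, -1 for failure) and the exact parameter types are assumptions; parsing the JSON itself, for example with nlohmann/json, is left out so that the sketch needs only the standard library.

```cpp
#include <cassert>
#include <cstddef>
#include <cstring>
#include <map>
#include <string>

class PropertyStore {
 public:
  // Store the buffer contents under the given key.
  int setProperty(const char* key, const void* buf, int buf_size) {
    if (key == nullptr || buf == nullptr || buf_size < 0) return -1;
    props_[key] = std::string(static_cast<const char*>(buf),
                              static_cast<std::size_t>(buf_size));
    return 0;
  }

  // Copy the stored value, including its terminating null, into the
  // caller's buffer. Fails if the key is unknown or the buffer is too small.
  int getProperty(const char* key, char* property, int buf_size) const {
    if (key == nullptr || property == nullptr || buf_size <= 0) return -1;
    const auto it = props_.find(key);
    if (it == props_.end()) return -1;
    const std::string& value = it->second;
    if (value.size() + 1 > static_cast<std::size_t>(buf_size)) return -1;
    std::memcpy(property, value.c_str(), value.size() + 1);
    return 0;
  }

 private:
  std::map<std::string, std::string> props_;
};
```

Checking buf_size before copying is the important part: the caller owns the buffer, so the extension must never write past it.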

IExtensionProvider

Encapsulate your IExtensionVideoFilter implementation into an extension.

Methods include:

- enumerateExtensions
- createVideoFilter

enumerateExtensions

Enumerates your extensions that can be encapsulated. The SDK triggers this callback when loading the extension. In the callback, you need to return information about all of your extensions that can be encapsulated.

| Parameter | Description |
|---|---|
| extension_list | Extension information, including extension type and name. For details, see the definition of ExtensionMetaInfo. |
| extension_count | The total number of the extensions that can be encapsulated. |

ExtensionMetaInfo contains the extension type (EXTENSION_TYPE) and the extension name.

If you specify VIDEO_PRE_PROCESSING_FILTER or VIDEO_POST_PROCESSING_FILTER as EXTENSION_TYPE, the SDK calls the createVideoFilter method after the customer creates the IExtensionProvider object when initializing RtcEngine.

createVideoFilter

Creates a video filter. You need to pass the IExtensionVideoFilter instance in this method.

After the IExtensionVideoFilter instance is created, the extension processes video frames with methods in the IExtensionVideoFilter class.