Content moderation

Delivering a safe and appropriate chat environment to your users is essential. Chat gives you multiple options to intelligently moderate the chat content and handle inappropriate user behavior.

The following message management tools are available:

- Message reporting.

- Message moderation, including text and image moderation, profanity filtering, and domain filtering. Message moderation can be applied globally or to selected chat groups and chat rooms.

Prerequisites

- You have created a valid Agora developer account.

- Moderation is not enabled by default. To use this feature, you need to subscribe to the Pro or Enterprise pricing plan and enable it in Agora Console.

Add-on fees are incurred if you use this feature. See Pricing for details.

Enable the moderation feature

- Log in to Agora Console.

- In the left navigation menu, click Project Management > Config for the project in which you want to enable the moderation feature > Config of Chat > Features > Overview.

- On the Chat Overview page, turn on the switch for each moderation option that you want to use, as shown in the following figure:

Message management

The following table summarizes the message management tools provided by Chat:

| Function | Description |
|---|---|
| Message reporting | The reporting API allows end-users to report objectionable messages directly from their applications. Moderators can view the report items on Agora Console and process the messages and message senders. |
| Text and image moderation | This feature uses a third-party machine learning model to automatically moderate text and image messages and block questionable content. |
| Profanity filter | The profanity filter detects and filters out profanities contained in messages according to the configuration you set. |
| Domain filter | The domain filter detects and filters out specified domains contained in messages according to the configuration you set. |

Implement the reporting feature

The Chat SDK provides a message reporting API, which allows end-users to report objectionable messages directly from their applications. After the Chat server receives the report, it stores the report and displays it on Agora Console. Moderators can view the report items on the Agora Console and process the messages and message senders according to their content policy.

To use the reporting feature, refer to the following code sample to call the reporting API:

After a user reports a message from the application, moderators can check and deal with the report on Agora Console:

- To enter the Message Report page, in the left navigation menu, click Project Management > Config for the project that you want > Config of Chat > Message Report > History, as shown in the following figure:

- On the Message Report page, moderators can filter report items by time period, session type, or handling method. They can also search for a specific report item by user ID, group ID, or chat room ID. Chat supports two handling methods for reports: withdrawing the message or asking the sender to process the message.
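To make the filtering and handling options on the Message Report page concrete, here is a minimal Python sketch of report items and the filters described above. The `ReportItem` fields, the session-type labels, and the handling-state strings are hypothetical names chosen for illustration; they are not the Chat SDK's or Agora Console's actual data model.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Optional

# Hypothetical handling states; the Console offers the two methods above.
PENDING, WITHDRAWN, SENDER_ASKED = "pending", "withdrawn", "sender_asked"


@dataclass
class ReportItem:
    """One reported message (illustrative fields, not Agora's schema)."""
    report_id: str
    message_id: str
    reporter_id: str
    sender_id: str
    session_type: str       # assumed labels: "single", "group", "chatroom"
    conversation_id: str    # peer user ID, group ID, or chat room ID
    reported_at: datetime
    handling: str = PENDING


def withdraw_message(item: ReportItem) -> None:
    """Handling method 1: withdraw the reported message."""
    item.handling = WITHDRAWN


def ask_sender_to_process(item: ReportItem) -> None:
    """Handling method 2: ask the sender to process the message."""
    item.handling = SENDER_ASKED


def filter_reports(
    items: Iterable[ReportItem],
    start: Optional[datetime] = None,
    end: Optional[datetime] = None,
    session_type: Optional[str] = None,
    handling: Optional[str] = None,
    search_id: Optional[str] = None,
) -> List[ReportItem]:
    """Apply the Message Report filters: time period, session type,
    handling method, and search by user, group, or chat room ID."""
    matches = []
    for item in items:
        if start is not None and item.reported_at < start:
            continue
        if end is not None and item.reported_at > end:
            continue
        if session_type is not None and item.session_type != session_type:
            continue
        if handling is not None and item.handling != handling:
            continue
        ids = (item.reporter_id, item.sender_id, item.conversation_id)
        if search_id is not None and search_id not in ids:
            continue
        matches.append(item)
    return matches


reports = [
    ReportItem("r1", "m1", "alice", "bob", "group", "g100", datetime(2024, 1, 1, 10)),
    ReportItem("r2", "m2", "carol", "dave", "chatroom", "room7", datetime(2024, 1, 2, 9)),
]
withdraw_message(reports[0])
print([r.report_id for r in filter_reports(reports, handling=WITHDRAWN)])  # ['r1']
```

Each filter is optional and combined with AND, which is one plausible reading of how the page's filters interact.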

Text and image moderation

Introduction

Powered by Microsoft Azure Content Moderator, Agora Chat's text and image moderation scans messages for illegal and inappropriate text and image content and marks the content for moderation. Azure Content Moderator uses the following four categories to moderate messages:

- Adult: The content may be considered sexually explicit or adult in certain situations.

- Mature: The content may be considered sexually suggestive or mature in certain situations.

- Offensive: The content may be considered offensive or abusive.

- Personal Data: The content contains personally identifiable information, such as email addresses, US mailing addresses, IP addresses, and US phone numbers. Note that content moderation identifies an input as a US mailing address only if the input contains all elements of a mailing address (for example, ZIP code and state abbreviation).

After enabling the text and image moderation feature on Agora Console, you can set a sensitivity threshold for each moderation category. If the sensitivity threshold is exceeded, Agora Chat handles the message as specified in the moderation rule. A higher sensitivity means a stricter review, so Agora Chat blocks more inappropriate content. Test the moderation rule to check whether the sensitivity thresholds you set meet your requirements.
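The per-category threshold check can be sketched as follows. This is a minimal Python illustration that assumes each category produces a score between 0.0 and 1.0 and that a message is rejected when any score exceeds that category's threshold. The category keys and the `moderate` function are hypothetical and do not reflect how Agora Chat or Azure actually computes or exposes scores. In this model, a lower threshold corresponds to a higher sensitivity, so more messages are rejected.

```python
from typing import List, Mapping, Tuple

# Hypothetical keys for the four categories described above.
CATEGORIES = ("adult", "mature", "offensive", "personal_data")


def moderate(
    scores: Mapping[str, float], thresholds: Mapping[str, float]
) -> Tuple[str, List[str]]:
    """Return ("Rejected", exceeded_categories) if any category score is
    above its threshold; otherwise return ("Passed", [])."""
    exceeded = [
        category
        for category in CATEGORIES
        # A missing score counts as 0.0; a missing threshold (1.0) never triggers.
        if scores.get(category, 0.0) > thresholds.get(category, 1.0)
    ]
    return ("Rejected", exceeded) if exceeded else ("Passed", [])


scores = {"adult": 0.10, "mature": 0.45, "offensive": 0.20}
lenient = {category: 0.8 for category in CATEGORIES}
strict = {category: 0.4 for category in CATEGORIES}
print(moderate(scores, lenient))  # ('Passed', [])
print(moderate(scores, strict))   # ('Rejected', ['mature'])
```

Running the same scores against both settings shows why testing matters: the stricter thresholds reject a message that the lenient ones let through.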

You can also impose a penalty on users who reach the violation limit within a time period. The moderation penalties include the following:

- Penalties at the app level or in one-to-one chats:

  - Ban: A banned user is immediately forced offline and cannot log in to Agora Chat again until unbanned. For example, if the violation limit is 5 times within 1 minute, a user who sends inappropriate content 5 times within 1 minute is banned. Once a user is banned, the user state changes to Blocked on the Users page under Operation Management. To unban the user, click Unblock in the Action column or call the RESTful API.

  - Force Offline: The user is forced to go offline and must log in again to use Agora Chat normally.

  - Delete: The user is deleted. If the user is the owner of a chat group or chat room, the system also deletes that group or chat room.

- Penalties for users in groups and chat rooms:

  - Add to Block list: A user added to the block list of a group or chat room can no longer view or receive messages in that group or chat room.

  - Kick Out: The user is removed from the group or chat room. A user removed from a group is also removed from the message threads that the user joined in that group.

  - Mute: A muted user cannot send messages in the group or chat room. A user muted in a group also cannot send messages in the message threads that the user joined in that group.
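A violation limit such as "5 violations within 1 minute" behaves like a sliding-window counter. The following Python sketch is a hypothetical illustration of that idea, not Agora Chat's server-side implementation. The `ViolationTracker` class, its parameters, and the choice to reset the count after a penalty are all assumptions.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Optional


class ViolationTracker:
    """Hypothetical per-user sliding-window counter for moderation
    violations. Returns the configured penalty once a user reaches
    `limit` violations within `window_seconds`."""

    def __init__(self, limit: int, window_seconds: float, penalty: str) -> None:
        self.limit = limit
        self.window_seconds = window_seconds
        self.penalty = penalty
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, user_id: str, timestamp: float) -> Optional[str]:
        """Record one violation at `timestamp` (in seconds) and return the
        penalty if the limit is reached, otherwise None."""
        events = self._events[user_id]
        events.append(timestamp)
        # Forget violations that are older than the window.
        while events and timestamp - events[0] >= self.window_seconds:
            events.popleft()
        if len(events) >= self.limit:
            events.clear()  # assumption: counting restarts after a penalty
            return self.penalty
        return None


tracker = ViolationTracker(limit=5, window_seconds=60, penalty="Ban")
print([tracker.record("user1", t) for t in (0, 10, 20, 30, 40)])
# [None, None, None, None, 'Ban']
```

The same five violations spread more than a minute apart never trigger the penalty, because each new violation pushes the older ones out of the window.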

To see how moderation works and determine which moderation settings suit your needs, you can test different text strings and images on Agora Console.

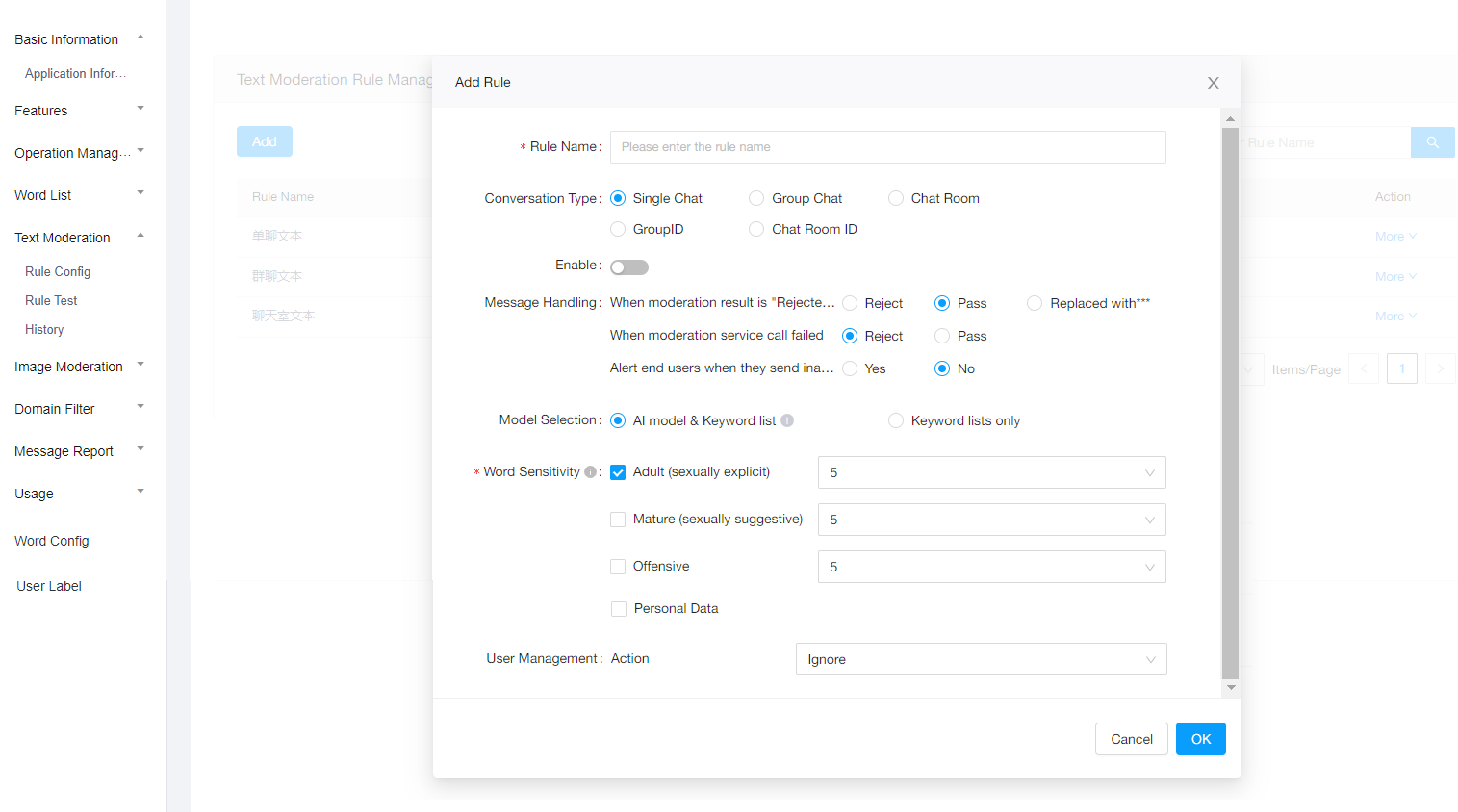

Create a moderation rule

Taking text moderation in one-to-one chats as an example, follow these steps to create a text moderation rule:

- To enter the Rule Config page, in the left navigation menu, click Project Management > Config for the project that you want > Config of Chat > Text Moderation or Image Moderation > Rule Config, as shown in the following figure:

- To create a text moderation rule, click Add.

A text moderation rule supports the following fields:

- Rule Name: The rule name.

- Conversation Type: The moderation scope, which can be one-to-one chats, a specific chat group or chat room, or all chat groups or chat rooms. A rule set for a specific chat group or chat room overrides the global moderation rule for chat groups and chat rooms.

- Enable: Whether the rule is turned on or off.

- Message Handling:

  - When the moderation result is Rejected, you can set the action on the moderated message to one of the following options:

    - Blocks the message. Agora recommends using this setting for online messages after you fully test this moderation rule and ensure that it suits your needs.

    - (Default) Sends the message. Agora recommends using this setting while you are testing this moderation rule and do not want the rule to affect online messages.

    - Replaces the message with ***.

  - When the moderation action fails, you can set the message handling policy to one of the following options. For example, the text moderation interface has a timeout of 200 milliseconds; if no result is returned within 200 milliseconds, the moderation action times out.

    - Blocks the message.

    - (Default) Sends the message.

  - If the action on the moderated message is set to Blocks the message, the 508 (MESSAGE_EXTERNAL_LOGIC_BLOCKED) error is returned after the message is blocked. You can choose whether to indicate this error in the application. If you do, a red exclamation mark is displayed before the blocked message.

- Model Selection: Whether to use AI alongside your keyword lists.

- Word Sensitivity: The threshold for each moderation category.

- User management: Imposes a penalty on users who reach the violation limit within a time period. The penalties include banning the user, forcing the user to go offline, or deleting the user.
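The following Python sketch shows how the Conversation Type precedence and the Message Handling options fit together. A rule for a specific group or room is chosen over the global rule for that conversation type. The chosen rule's actions then decide what happens when the result is Rejected or when the moderation call fails. The `Rule` class, the action strings, and the `moderate` callback (which returns `None` to stand for a failed or timed-out check) are hypothetical. Only the default actions and the 508 error come from the field list above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple

# Hypothetical action labels mirroring the Console options.
BLOCK, SEND, REPLACE = "block", "send", "replace"
BLOCKED_ERROR = (508, "MESSAGE_EXTERNAL_LOGIC_BLOCKED")

# A moderation callback returns "Passed", "Rejected", or None on failure.
Moderator = Callable[[str], Optional[str]]


@dataclass
class Rule:
    name: str
    enabled: bool = True
    on_rejected: str = SEND  # default: send the message
    on_failure: str = SEND   # default: send the message (BLOCK or SEND only)


def resolve_rule(
    rules: Dict[Tuple[str, Optional[str]], Rule], conv_type: str, conv_id: str
) -> Optional[Rule]:
    """Prefer a rule keyed by (type, specific ID) over the global rule
    keyed by (type, None)."""
    specific = rules.get((conv_type, conv_id))
    return specific if specific is not None else rules.get((conv_type, None))


def handle_message(
    text: str, rule: Optional[Rule], moderate: Moderator
) -> Tuple[Optional[str], Optional[Tuple[int, str]]]:
    """Return (delivered_text, error); delivered_text is None if blocked."""
    if rule is None or not rule.enabled:
        return text, None
    result = moderate(text)
    if result is None:
        action = rule.on_failure
    elif result == "Rejected":
        action = rule.on_rejected
    else:
        action = SEND
    if action == BLOCK:
        return None, BLOCKED_ERROR
    if action == REPLACE:
        return "***", None
    return text, None


def keyword_moderator(text: str) -> Optional[str]:
    # Hypothetical stand-in for the real moderation service.
    return "Rejected" if "badword" in text else "Passed"


rules = {
    ("group", None): Rule("all-groups", on_rejected=REPLACE),
    ("group", "g42"): Rule("group-g42", on_rejected=BLOCK, on_failure=BLOCK),
}
print(handle_message("hi badword", resolve_rule(rules, "group", "g1"), keyword_moderator))
# ('***', None)
print(handle_message("hi badword", resolve_rule(rules, "group", "g42"), keyword_moderator))
# (None, (508, 'MESSAGE_EXTERNAL_LOGIC_BLOCKED'))
```

Keeping `on_failure` separate from `on_rejected` lets you, for instance, block rejected content while still delivering messages when the moderation service is slow.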

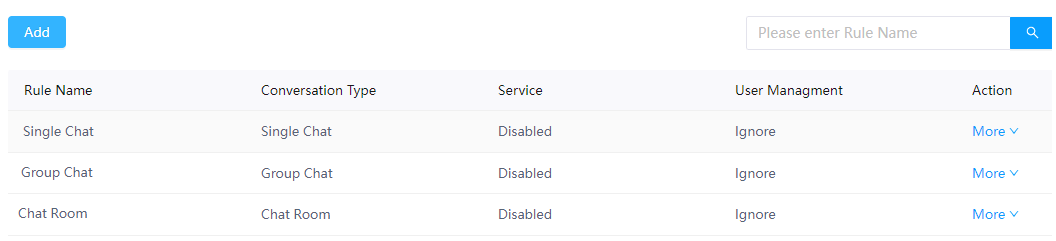

After creating a rule, you can edit or delete the rule:

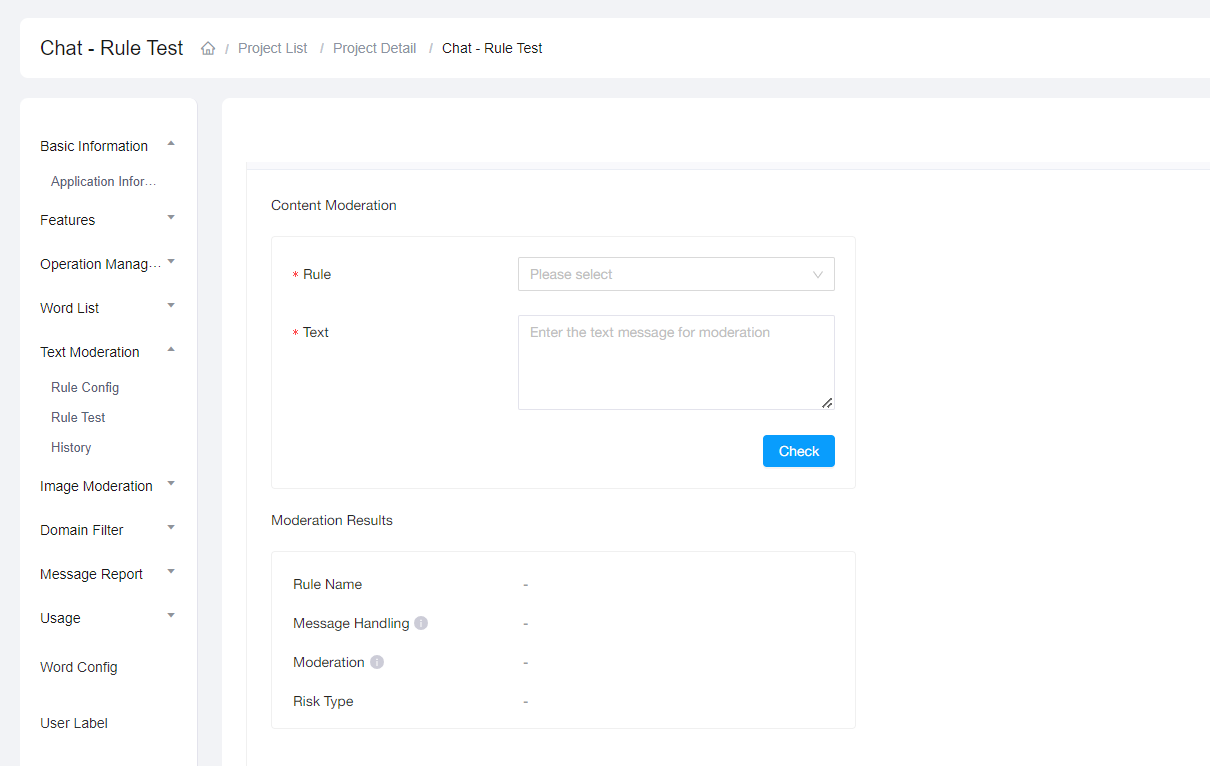

Conduct a text or image moderation rule test

- To enter the Rule Test page, in the left navigation menu, click Project Management > Config for the project that you want > Config of Chat > Text Moderation or Image Moderation > Rule Test, as shown in the following figure:

- Select a rule, enter the text to moderate, and click Check to test the rule. The moderation result is displayed on the same page.

Profanity filter

The profanity filter detects and filters out profanities contained in messages according to the configuration you set.

Follow these steps to specify a profanity filter configuration:

- To enter the Rule Config page, in the left navigation menu, click Project Management > Config for the project that you want > Config of Chat > Word List > Rule Config, as shown in the following figure:

- On the Rule Config page, you can add or delete words and choose the filtering method to apply to messages that contain the specified keywords: either replace the keyword with *** or do not send the message. You can add up to 10,000 words to the list. Contact support@agora.io if you need to extend this limit to 100,000 words.
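A word-list filter with these two filtering methods can be sketched in Python as follows. This is a hypothetical illustration that assumes case-insensitive substring matching. Agora Chat's actual matching rules (word boundaries, normalization, language handling) are not described here. The `ProfanityFilter` class and its `method` values are illustrative names, and the 10,000-word cap mirrors the default limit stated above.

```python
import re
from typing import Iterable, Optional

MAX_WORDS = 10_000  # default list size stated above


class ProfanityFilter:
    """Hypothetical keyword filter: "replace" masks each match with ***,
    "block" drops the whole message."""

    def __init__(self, words: Iterable[str], method: str = "replace") -> None:
        if method not in ("replace", "block"):
            raise ValueError("method must be 'replace' or 'block'")
        # Deduplicate case-insensitively; longest first so that overlapping
        # entries (for example "darn" and "darnit") match the longer form.
        unique = sorted({w.lower() for w in words if w}, key=len, reverse=True)
        if len(unique) > MAX_WORDS:
            raise ValueError(f"word list exceeds {MAX_WORDS} entries")
        self.method = method
        self._pattern = (
            re.compile("|".join(map(re.escape, unique)), re.IGNORECASE)
            if unique
            else None
        )

    def apply(self, text: str) -> Optional[str]:
        """Return the text to deliver, or None if the message is blocked."""
        if self._pattern is None or self._pattern.search(text) is None:
            return text
        if self.method == "block":
            return None
        return self._pattern.sub("***", text)


masker = ProfanityFilter(["darn", "heck"])
print(masker.apply("Well, DARN it, what the heck"))  # Well, *** it, what the ***
print(ProfanityFilter(["darn"], method="block").apply("darn"))  # None
```

Substring matching also flags words that merely contain a listed term. Adding `\b` word boundaries avoids that for space-delimited languages, but it would miss matches in languages written without spaces.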

Domain filter

The domain filter can detect and filter out certain domains contained in messages according to configurations you set.

Follow these steps to specify a domain filter configuration:

- To enter the Rule Config page, in the left navigation menu, click Project Management > Config for the project that you want > Config of Chat > Domain Filter > Rule Config, as shown in the following figure:

- To create a domain filtering rule, click Add.

A domain filtering rule supports the following fields:

- Rule name: The rule name.

- Conversation type: The moderation scope, which can be one-to-one chats, a specific chat group or chat room, or all chat groups or chat rooms. A rule set for a specific chat group or chat room overrides the global moderation rule for chat groups and chat rooms.

- Enable: Whether the rule is turned on or off.

- Message handling: One of the following options:

  - Blocks messages containing the domain.

  - Only allows messages containing the domain to pass.

  - Replaces the domain in the message with ***.

  - Takes no action on the moderated message.

- Domain name: Adds a domain to the rule.

- User management: Imposes a penalty on users who reach the violation limit within a time period. The penalties include banning the user, forcing the user to go offline, or deleting the user.
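The block, replace, and no-action options can be sketched in Python as follows. This is a hypothetical illustration. The loose `DOMAIN_RE` pattern, the assumption that a listed domain also covers its subdomains, and the `filter_domains` function are not taken from Agora Chat. The allow-only option is omitted because its exact matching semantics are not described here.

```python
import re
from typing import Iterable, Optional

# Loose host-name pattern (for example example.com or mail.example.co.uk).
DOMAIN_RE = re.compile(r"\b(?:[a-z0-9-]+\.)+[a-z]{2,}\b", re.IGNORECASE)


def filter_domains(text: str, domains: Iterable[str], action: str) -> Optional[str]:
    """Hypothetical domain filter. `action` is "block", "replace", or "none".
    Returns the text to deliver, or None if the message is blocked."""
    listed = {d.lower().strip(".") for d in domains}

    def is_listed(host: str) -> bool:
        # Assumption: a listed domain also covers its subdomains.
        host = host.lower()
        return any(host == d or host.endswith("." + d) for d in listed)

    if action not in ("block", "replace", "none"):
        raise ValueError(f"unknown action: {action}")
    if action == "none" or not any(is_listed(m.group(0)) for m in DOMAIN_RE.finditer(text)):
        return text
    if action == "block":
        return None
    return DOMAIN_RE.sub(lambda m: "***" if is_listed(m.group(0)) else m.group(0), text)


print(filter_domains("Visit spam.example.com now", ["example.com"], "replace"))  # Visit *** now
print(filter_domains("See good.org", ["example.com"], "block"))  # See good.org
```

Matching on whole host names, rather than on raw substrings, keeps a listed `example.com` from also catching an unrelated `notexample.com`.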

After creating a rule, you can edit or delete the rule:

Check the moderation history

You can check the history of text moderation, image moderation, profanity filtering, and domain filtering on Agora Console. You can filter the moderation items by time period, session type, or moderation result. You can also search for a specific item by sender ID or receiver ID.

User management

You can impose a penalty on users for repeated violations. The penalties can be applied as a global application setting, or only to a specific chat group or room. The following table lists all the user moderation options that Chat supports:

| User moderation options | Actions | Description |
|---|---|---|
| Global actions on users | Banning a user | A banned user immediately goes offline and is not allowed to log in again until the ban is lifted. |
| | Forcing a user to go offline | Users who are forced to go offline need to log in again to use the Chat service. |
| | Deleting a user | If the deleted user is the owner of a chat group or chat room, the group or room is also deleted. |
| Chat group management | Muting a user in a chat group | A muted user cannot send messages in this chat group until unmuted. |
| | Muting all users in a chat group | Members of a muted group cannot send messages until the muted state is lifted. |
| | Managing a group blocklist | Users who are added to the group blocklist are removed from the group immediately and cannot join the group again. |
| | Removing a user from a chat group | The removed user can no longer receive messages in this group until rejoining the group. |
| Chat room management | Muting a user in a chat room | A muted user cannot send messages in this chat room until unmuted. |
| | Muting all users in a chat room | Members of a muted room cannot send messages until the muted state is lifted. |
| | Managing a room blocklist | Users who are added to the room blocklist are removed from the room immediately and cannot join the room again. |
| | Removing a user from a chat room | The removed user can no longer receive messages in this room until rejoining the room. |
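The group-level actions in the table above can be modeled as a small amount of membership state. The following Python sketch is hypothetical. The `GroupModeration` class and its methods are illustrative and do not correspond to the Chat SDK or RESTful API. It encodes only the behaviors stated in the table: muted users and muted groups cannot send, blocklisted users are removed and cannot rejoin, and removed users can rejoin.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass
class GroupModeration:
    """Hypothetical in-memory model of the chat group actions in the table
    above; chat room actions follow the same pattern."""
    members: Set[str] = field(default_factory=set)
    muted: Set[str] = field(default_factory=set)
    blocklist: Set[str] = field(default_factory=set)
    all_muted: bool = False

    def join(self, user: str) -> bool:
        """Blocklisted users cannot join again; removed users can."""
        if user in self.blocklist:
            return False
        self.members.add(user)
        return True

    def remove(self, user: str) -> None:
        self.members.discard(user)

    def add_to_blocklist(self, user: str) -> None:
        # Blocklisting removes the user from the group immediately.
        self.members.discard(user)
        self.blocklist.add(user)

    def mute(self, user: str) -> None:
        self.muted.add(user)

    def unmute(self, user: str) -> None:
        self.muted.discard(user)

    def can_send(self, user: str) -> bool:
        return user in self.members and not self.all_muted and user not in self.muted

    def receives_messages(self, user: str) -> bool:
        return user in self.members


group = GroupModeration(members={"alice", "bob", "carol"})
group.mute("bob")
group.remove("carol")
group.add_to_blocklist("alice")
print(group.can_send("bob"), group.receives_messages("carol"), group.join("alice"))
# False False False
```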

Take global actions on users

- To enter the User Management page, in the left navigation menu, click Project Management > Config for the project that you want > Config of Chat > Operation Management > User, as shown in the following figure:

- To take action on a user (banning a user, deleting a user, or forcing a user to go offline), search for the user ID, and click More.

Take actions on chat group members

- To enter the Chat Group Management page, in the left navigation menu, click Project Management > Config for the project that you want > Config of Chat > Operation Management > Group, as shown in the following figure:

- To take action on a group member (removing a member from the group or adding a user to the group blocklist), search for the group ID, and click More.

- You can also click the group ID to enter the group's moderation dashboard, where you can manage the group info, group members, and messages in real time.

To use the moderation dashboard, enable it on the Features > Overview page.

Take actions on chat room members

- To enter the Chat Room Management page, in the left navigation menu, click Project Management > Config for the project that you want > Config of Chat > Chat Room Management, as shown in the following figure:

- To take action on a room member (removing a member from the room or adding a user to the room blocklist), search for the room ID, and click More.

- You can also click the room ID to enter the room's moderation dashboard, where you can manage the room info, room members, and messages in real time.

To use the moderation dashboard, enable it on the Features > Overview page.